News & Trends

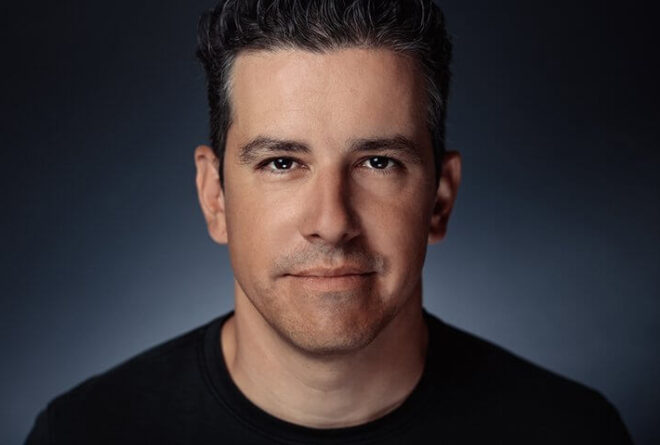

Google AI Expert Yariv Adan on AI and the future

THE TOPIC OF ARTIFICIAL INTELLIGENCE (AI) IS ON EVERYONE’S LIPS, but while many of us use the technology, few understand its true meaning and potential. Yariv Adan, Senior Director of Product for Conversational AI in Google Cloud, gives us a deep dive into the world of AI, from its role in our daily lives to the responsibilities of companies like Google and the exciting innovations that await us in the coming years.

“We Have a Responsibility for the Technologies We Create.”

The topic of artificial intelligence is currently omnipresent. In society, there is not only great interest but also scepticism – and sometimes even fear. What is your take on this?

While AI’s presence in popular media and conversations has recently skyrocketed, we should remember AI didn’t emerge in the last year – it has been serving us broadly and reliably for quite some time now.

In 2016, Google established itself as an AI-first company, and we have integrated AI responsibly across our product suite ever since. Every time you search on Google Search, navigate on Google Maps, take a photo on Android or edit a photo in Google Photos, you are benefiting from AI. Google Assistant, Google Lens, and Google Translate are all AI-first applications with magical experiences that are used by hundreds of millions of people every day.

It’s definitely an exciting time in the development and adoption of Generative AI, and we’re enthusiastic about the transformational power of the technology. However, we also understand that AI, as a fast-moving and still-emerging technology, poses evolving complexities and risks. At Google, our development and use of AI must address these risks. That’s why we believe that responsible AI development is so important, and it’s also why we launched our AI Principles. Google is committed to leading and setting the standard in developing and implementing useful and beneficial applications, applying ethical principles, and evolving our approaches as we learn from research, experience, users, and the wider community.

Google’s AI Principles

Google’s AI Principles establish an ethical framework for AI development, with a focus on societal benefit, safety, and transparency. They commit to minimizing bias and avoiding certain harmful applications. For detailed information, you can view Google’s AI Principles.

Is there a generally valid definition of artificial intelligence?

I don’t think there is a broadly agreed precise definition. At an intuitive high level, I like the original and somewhat circular and tautological definition coined by John McCarthy in 1955: “The science and engineering of making intelligent machines.” The question then is, what is “intelligence” considered to be? There are mostly two approaches to that:

1. Emphasising learning: the ability to learn and perform suitable techniques to solve new and unexpected classes of problems and goals, appropriate to the context in an uncertain, ever-varying world. A fully pre-programmed factory robot is flexible, accurate, and consistent but not intelligent.

2. Emphasising human-like intelligence: the creation of computer systems capable of performing tasks that historically only a human could do, such as reasoning, making decisions, or solving problems. These tasks and problems vary greatly. Some examples of such tasks include: playing video and strategic games, driving cars, writing code, advanced robotics, visual understanding, developing science, telling jokes and writing poems, generating images and videos, and most recently, communicating and interacting using natural human language.

Both answers are exciting and inspiring.

We are working on standards for responsible use of AI.

Which areas of life will be most influenced by AI in the coming years?

I share the view that AI will become as foundational as electricity. So I don’t think it will be narrowed to specific areas, but quite the opposite.

Personally, I am excited about the “AI for good” opportunities where AI can make our world a better place: both on a personal level, where AI can help improve personal health, safety, and physical and emotional well-being, and on a global societal level, where AI can help address climate and environmental challenges, democratise global access to expert resources across education, health, information and services, advanced science, and more.

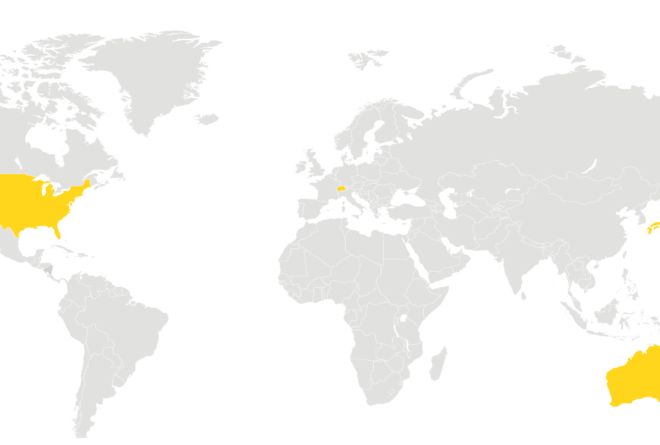

I’m especially excited because there are already promising projects in these areas. For example, as part of the AI for the Global Goals initiative:

● Google’s Global Flood Forecasting ML Model uses multiple data sources to develop flood forecasts up to 7 days in advance of a flood.

● Rainforest Connection (RFCx) develops acoustic monitoring technology that uses AI to protect vulnerable ecosystems by detecting threats like illegal logging in real-time.

● Wadhwani AI created an AI-powered early warning system that helps farmers protect their crops by determining the right time to spray pesticides through immediate, localised advice.

Google has also been intensively involved with artificial intelligence for many years. What responsibility does Google bear here as a company?

Google understands that how AI technology is developed and used will have a significant impact on society for many years to come and that we have a responsibility for the technologies we create. Because of this, in 2018 we launched our AI Principles, which are company-wide. Every new product and customer engagement is reviewed through the lens of our AI Principles.

“We believe it’s critical to embrace the tension between boldness and responsibility.”

AI holds great potential, including the potential for conflict. How can AI be part of the solution rather than part of the problem in the future?

We believe our approach to AI must be both bold and responsible. To us that means developing AI in a way that maximises the positive benefits to society while addressing the challenges, guided by our AI Principles. While there is natural tension between the two, we believe it’s possible – and, in fact, critical – to embrace that tension productively. The only way to be truly bold in the long term is to be responsible from the start.

We also know this isn’t something any single company can do in isolation. Building AI responsibly must be a collective effort involving researchers, social scientists, industry experts, governments, creators, publishers and people using AI in their daily lives. We’re committed to sharing our innovations with others to increase impact.

In connection with AI, the term “technological singularity” is often brought up. It refers to the point in time when machines controlled by artificial intelligence are able to continuously improve themselves to such an extent that they are beyond the control of human hands. Can you and your colleagues already foresee in which direction it will develop?

We can’t tell the future, but what we can control right now is how we as a company approach developing and releasing our AI offerings. Google believes that companies can’t just build advanced technologies and disown responsibility for how they get used. While governments work to set the boundaries, ultimately companies are on the frontline and have a critical role to play.

We develop our AI solutions according to Google’s AI Principles in order to give developers a responsible AI foundation to start from. We know that control is necessary so developers can define and enforce responsibility and safety in the context of their own applications. The strength of our technology and our ability to help our customers be successful with it is only possible if we can do so responsibly.

AI tools like Bard can already write texts, take over administrative tasks and much more. But can they also support us in research? Are there already examples where AI is supporting ground-breaking developments and making a positive contribution?

We make contributions across many fields that help to address the world’s most critical challenges and benefit society, whether it’s physics, material science, or healthcare. AlphaFold from Google DeepMind is one such project: AlphaFold can accurately predict 3D models of protein structures and is accelerating research in nearly every field of biology, including the work to tackle antibiotic resistance, fight malaria, and develop drugs for neglected diseases. In partnership with EMBL’s European Bioinformatics Institute, the AlphaFold Protein Structure Database freely shares predictions for over 200 million proteins.

We also empower other organisations, businesses and governments to power their use cases through Google Cloud Vertex AI. For example:

● The American Cancer Society is using Google Cloud’s AI and ML capabilities to identify novel patterns in digital pathology images, enhancing the quality and accuracy of image analysis by removing human limitations, fatigue, and bias.

● Johns Hopkins University BIOS Division uses Google Cloud technology to reduce research and infrastructure costs, make trial core laboratory functions a real-time capability, and enable integration of multiple small fragments using Healthcare API.

In terms of future generations and their education, what advice would you give to today’s school leaders regarding AI?

As a foundational technology, AI should be a key part of the educational curriculum, and children at all levels should develop an understanding of it.

Through understanding, kids will develop the tools to tell FUD (fear, uncertainty and doubt) from fact and become more responsible users who know how to best use the technology for good, while also keeping in mind its weaknesses and risks.

It’s critical that as kids become young adults, they have the knowledge and tools to take part in an informed discussion on how to integrate AI in our daily life as a society, and hopefully they will build the next generation of AI to be even more useful and safer.

AI is also a great tool for augmenting and improving education:

● Using translation, high-quality materials can be made available to everyone.

● Using generative capabilities and the ability to understand students’ questions, AI can take the existing curriculum and make it personalised, explaining material to students in the way they best understand, answering questions, generating quizzes, and even generating images and videos.

We should think of AI in this capacity as another tool or an advanced assistant, similar to using a calculator for solving a mathematical equation. AI can help students go further faster, but it shouldn’t be feared as a disruptor to education.

Artificial intelligence is supposed to make our everyday lives easier. Does the use of AI also create opportunities for people with age-related and/or physical limitations?

Google believes AI has the potential to improve health on a planetary scale and bring to life our mission of billions of healthier people. Google is already making advancements with:

● Google’s ARDA tool screens 500+ patients a day for diabetic retinopathy, the leading cause of blindness.

● Google’s partnership with Northwestern Medicine, in a first-of-its-kind clinical study, explores if AI models can reduce the time to diagnosis, narrow the assessment gap, and improve the patient experience.

● In communities where caregivers are in short supply, Google’s AI model automates the detection of tuberculosis and expedites treatment.

● Google is also exploring the potential of our state-of-the-art medical Large Language Model, Med-PaLM 2, to assist healthcare professionals and improve patient outcomes.

● We’ve also previously licensed our mammography AI research model for real-world clinical practice with iCAD, a leader in medical technology and cancer detection.

● Google has also announced a deal with Aidence to help improve lung cancer screening with AI, and entered a partnership with PacBio, a developer of genome sequencing instruments, to further advance genomic technologies.

On a more personal note, as a person with a disability who has also worked on accessible products, I can confidently say YES! (I have a paralysed right arm due to a motorcycle accident.) Voice-activated products are a life-changing technology for many people. One of my favourite projects is Euphonia – a special AI model from Google Research that has been trained to provide speech recognition for people with impaired speech. This has been especially life-changing for people with ALS, who suffer from both speech and mobility issues. Combined with the Google Assistant, the system can repeat the user’s command in a “regular” voice, allowing them to use their voice to control their home environment and devices and to communicate more easily. This setup, powered by AI, has allowed them daily independence that would otherwise be impossible.

I have personally been involved in projects for the elderly, people with chronic conditions, preventive care, exoskeletons, and more. I am a huge believer in the potential of this technology to do good in this space.

Google is always surprising us with something new. What innovations can we expect from Google in the coming years?

I am actually very excited about our efforts with Google Cloud’s Vertex AI to empower others to innovate with AI, at a scale and with a diversity we couldn’t achieve by ourselves. Vertex AI democratises AI, far beyond data scientists, enabling a much larger range of users such as developers and business users to also take advantage of AI. These businesses and organisations have a deep understanding of real-world challenges and opportunities where AI can be a game changer, across a wide set of industries and domains.

We make sure they have access to the latest and greatest AI technology, from both Google and the broader ecosystem (we support third-party and open-source models), in a way that allows them to quickly and efficiently build safe, scalable, enterprise-grade experiences, while “hiding” many of the complexities involved.

So this partnership, where Google is an AI platform technology provider, not “just” a solutions provider, excites me most. Better together.

Google has set itself the goal of being climate-neutral by 2030, but the high server capacity it needs consumes an immense amount of power. AI development will exacerbate this. How realistic is the target in this context?

While data centres now power more applications for more people than ever before, gains in efficiency have seen the share of global electricity consumption remain constant at about 1% since 2010. Google’s data centres are designed, built, and operated to maximise the efficient use of resources. We strive to ensure each data centre accelerates the transition to renewable energy and low or zero-carbon solutions.

Who is Yariv Adan?

Yariv has been leading product teams at Google since 2007. In his current role, Yariv is the Sr. Director of Product for Conversational AI in Google Cloud. Before that, he was one of the founders of the Google Assistant and Google Lens, the lead PM for privacy and security, built and led the Emerging Markets product team, and worked on YouTube monetisation. Before joining Google, Yariv spent 10 years as an engineering manager in various Israeli start-ups and companies.
